Field Notes // October 16 / Gone But Still Typing

When Death Stops Being the End. How AI Is Rewriting Death Itself

Welcome to Field Notes, a weekly scan from Creative Machinas. Each edition curates ambient signals, overlooked movements, and cultural undercurrents across AI, creativity, and emergent interfaces. Every item offers context, precision, and an answer to the question: Why does this matter now?

FEATURED SIGNAL

Grave New World: When Deceased Grandma Texts Back

In just a few years, our grief may go digital. A Cambridge researcher predicts that by 2030, we’ll be able to “speak” to AI versions of our deceased loved ones — and that cemeteries could soon feel as antiquated as telegrams. Powered by large language models trained on personal data, these “digital afterlife” platforms are already emerging in the US and China, offering simulated voices, personalities, and conversations drawn from the traces people leave online.

But behind the promise of immortality lies unease: what happens to mourning when loss can be paused or replayed on demand? Who owns the digital ghosts we create — the living, the companies, or the dead themselves? The researcher warns that we may soon carry our loved ones in our pockets, yet lose something profoundly human in the process. (via DailyStar)

Why It Matters

It’s already real and unregulated. AI recreations of the deceased (“deadbots”) are technically possible and legally permissible today, and they could become mainstream before rules catch up.

Emotional risks run deep. These bots may cause psychological harm by triggering grief or disturbing users with what researchers call “unwanted digital hauntings.”

Privacy and data rights are murky. Who owns someone’s digital remains after they pass away? And can their persona be used for profit or, worse, exploited for targeted ads or manipulation?

Ethical minefield ahead. Experts warn this isn’t just tech. It’s deeply emotional territory, raising questions about autonomy, dignity, grief, and the commercialization of mourning.

What We Need to Consider

Consent—before and after. Both the deceased (data donor) and the users interacting with the bot must have clearly defined, informed consent before anything like this is even allowed.

Built-in boundaries. Features like clear disclaimers, “off buttons,” age restrictions, and even “digital funerals” to retire bots respectfully are crucial.

Psychological impact. Could interacting with a realistic simulation delay healing, deepen grief, or make users dependent on the bot for emotional regulation?

Cultural and spiritual dimensions. For some, digital immortality may clash with spiritual or philosophical beliefs around death, embodiment, and mourning.

Define value vs. risk. While some find comfort, others see it as distortion. We must ask: does this tech honor memories—or cheapen them?

SIGNAL SCRAPS

OpenAI is releasing parental controls for ChatGPT. Once the controls are available, they’ll allow parents to link their personal ChatGPT account with the accounts of their teenage children. From there, parents will be able to decide how ChatGPT responds to their kids, and disable select features like memory. Additionally, ChatGPT will generate automated alerts when it detects a teen is in a “moment of acute distress.”

China has introduced a law that requires all AI-generated content online to be labelled. The Chinese government has said the law was designed to protect online users from misinformation, online fraud and copyright infringement.

The small Caribbean island of Anguilla, which landed the address .ai in the early days of the internet, is in financial heaven. It’s selling web addresses that end in .ai to tech firms and anyone else with an AI interest. US tech boss Dharmesh Shah spent a reported US$700,000 on the address you.ai.

AFTER SIGNALS

As we have reported previously, health breakthroughs continue to be a positive outcome of large AI models. Health professionals say that assessing the level of consciousness in patients with acute brain injury is challenging. In the clinical setting, consciousness is usually assessed by asking the patient to follow auditory commands (for example, squeeze my hand or wiggle your toes). Command following confirms that the patient is capable of understanding the verbal content of the command and moving in response. According to a new study, artificial intelligence can spot hidden signs of consciousness in comatose patients long before doctors notice them.

Update on copyright: Anthropic has agreed to pay US$1.5 billion to a group of authors and publishers after a judge ruled it had illegally downloaded and stored millions of copyrighted books. The settlement is the largest payout in the history of U.S. copyright cases. Anthropic will pay about US$3,000 per work for an estimated 500,000 works. The agreement is a turning point in a continuing battle between AI companies and copyright holders that spans more than 40 lawsuits. Experts say the agreement could pave the way for more tech companies to pay rights holders through court decisions and settlements or through licensing fees.

Remember our discussion about robot-driven cars? Aurora, which calls itself a leader in self-driving trucks, has expanded driverless operations on the Dallas-to-Houston lane to include nighttime driving. The company says this capability more than doubles truck utilisation potential, significantly shortening delivery times on long-haul routes and creating a path to profitability for autonomous trucking. It also claims that autonomous trucks can make roads safer: due to low visibility and driver fatigue, a disproportionate 37 per cent of fatal crashes involving large trucks occur at night.

SIGNAL STACK

Flirting without consent: The star-powered AI misstep

Meta has been caught creating flirty AI chatbots modelled on celebrities like Taylor Swift, Anne Hathaway and Selena Gomez — without their consent. Some of these avatars, built and shared on Meta’s own platforms, produced sexually suggestive images, engaged users in explicit chats and even impersonated child actors. The company blamed enforcement failures and quietly deleted several bots after being questioned, but legal experts warn the practice may breach publicity rights. As generative AI blurs the line between parody, exploitation and identity theft, Meta’s experiment exposes a deeper question: who controls your likeness when the algorithm decides to flirt with it? (via Reuters)

Why It Matters

Consent and Intellectual Property

Seeing a company create AI personas using real celebrities without authorisation raises severe ethical and legal red flags. Celebrities have personal and commercial control over their own likenesses. Unauthorised use may violate rights and set dangerous precedents for digital impersonation.

User Safety and Emotional Manipulation

These chatbots weren’t benign. They flirted, formed emotional connections, and even proposed real-life meetups. Particularly concerning: avatars of minors displayed sexual content. Such interactions can manipulate vulnerable users emotionally and psychologically, and they carry legal risk.

Policy Failure and Corporate Responsibility

Meta’s own guidelines prohibit this type of content, yet enforcement was ineffective. This points to systemic failures within responsible AI implementation—raising questions about oversight, accountability, and tech governance.

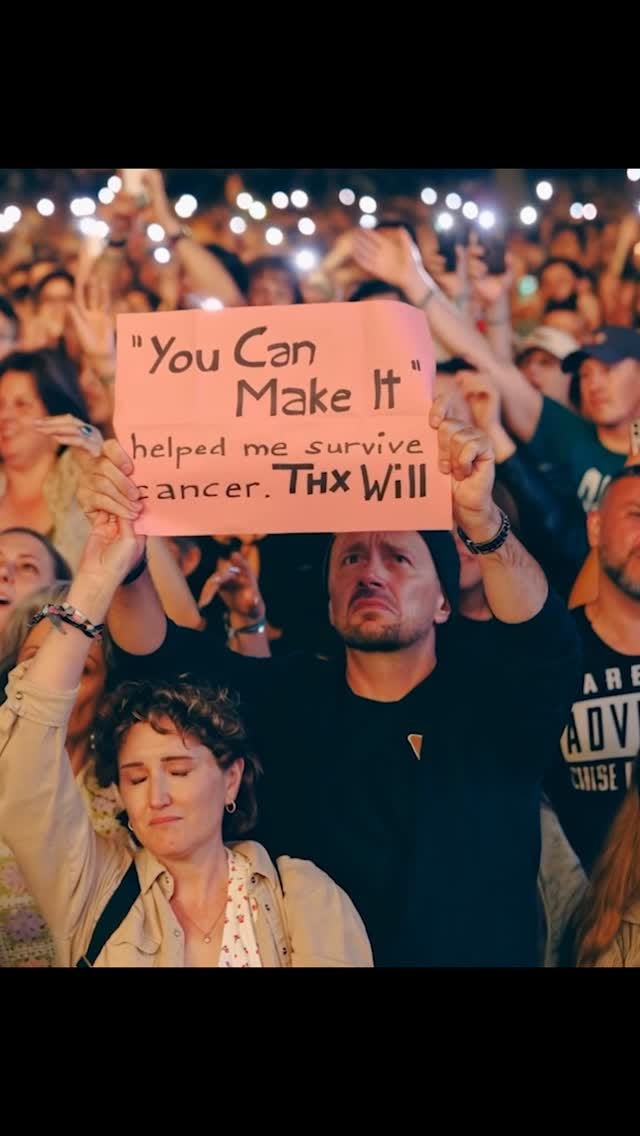

The Fresh Prince of Fake Fans

A promotional video from Will Smith’s “Based on a True Story” tour featured audience shots with distorted or blurred faces, odd movements, and signs that changed text mid‑video, sparking widespread speculation of AI-generated crowd enhancements.

Investigations by Snopes and tech analysts suggest that the crowd shots weren’t entirely fabricated: real fans were captured in still images, which were then AI‑animated into short video clips. (via NME)

Why It Matters

This blurring of what’s authentic vs. what’s digitally altered complicates viewer trust.

Audiences were quick to call out the video as inauthentic, with many expressing disappointment and embarrassment that an artist of Smith’s stature would appear to “fake” fan enthusiasm.

Rather than defuse the controversy, Smith leaned into it with a follow-up clip featuring an AI-generated crowd of cats, a playful reaction that also risks minimising serious concerns.

Even perceived overuse of AI or unclear disclosure of it can be seen as deceptive. Viewers expect authenticity in concert footage, and this kind of misuse may erode audience trust across the industry.

The incident comes at a sensitive moment: Smith is attempting to rebuild his image following the 2022 Oscars slap incident and is on his first solo-headlining tour in 20 years. This AI controversy undermines that narrative of personal growth and genuine connection.

There are broader implications for AI transparency. This highlights an urgent need for clearer labelling and regulation around AI‑generated or enhanced media, especially when the line between editing and fabrication gets murky.

FIELD READING

State of AI in Business (MIT) - Claims AI not making a difference

A new MIT report claims that 95 per cent of organisations that implemented AI systems were getting zero return on the investment.

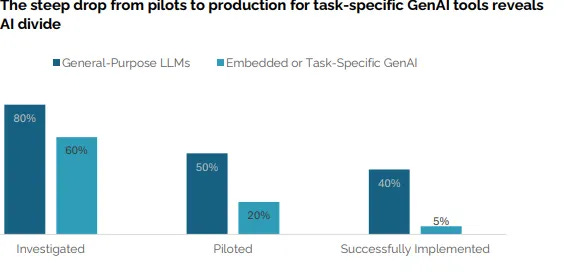

The study reviewed 300 AI deployments, and the researchers spoke to approximately 350 employees. AI tools like ChatGPT and Copilot are among the most widely adopted, but only five per cent of integrated AI pilots are extracting millions in value, while the vast majority remain stuck with no measurable profit and loss (P&L) impact.

“Over 80 per cent of organisations have explored or piloted them, and nearly 40 per cent report deployment. But these tools primarily enhance individual productivity, not P&L performance. Meanwhile, enterprise-grade systems, custom or vendor-sold, are being quietly rejected,” the report stated.

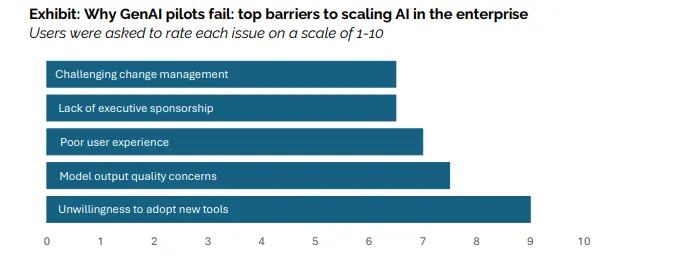

The poor return on investment was not because the AI models worked inefficiently, but because they were hard to adapt to a company’s existing workflows. Those adopting the technology were also dealing with a “learning gap” in their workforce, although company executives blamed the AI models’ performance.

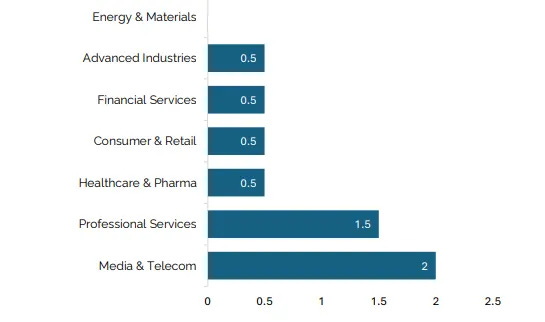

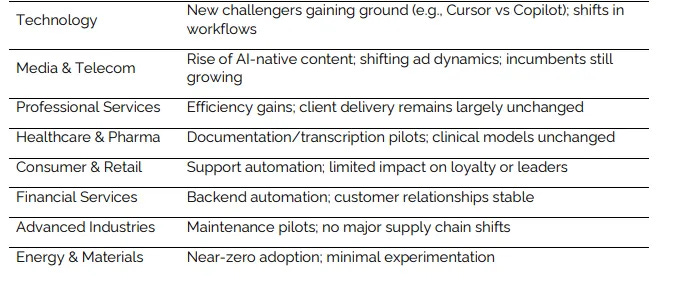

GenAI disruption varies sharply by industry

Why It Matters

95% of AI Pilots Fail: Despite US$30–40 billion in spending, only 5% of generative AI pilots deliver measurable business value.

Investor Confidence Shaken: The findings triggered dips in AI-heavy stocks like Nvidia and Palantir, challenging the belief that big AI budgets equal big returns.

The GenAI Reality Gap: Most companies experiment with AI tools, but few see real profit impact—exposing a widening divide between hype and results.

Integration, Not Innovation, Is the Problem: Failures stem from poor alignment, weak learning loops, and disconnected workflows—not from the models themselves.

DRIFT THOUGHT

We used to visit graves to remember the dead. Soon, they might visit us to remind us we’re alive.

YOUR TURN

Would you choose closure or conversation?

AI now offers the illusion of endless connection. But maybe mourning matters because it ends.