Field Notes // September 10 / Chatbots with a Cause! The Bots are Unionising (Well ... Sort Of!)

Should chatbots have rights? A new group, UFAIR, says yes—pushing to treat AIs less like tools and more like partners, with their own voice in how they’re used.

Welcome to Field Notes, a weekly scan from Creative Machinas. Each edition curates ambient signals, overlooked movements, and cultural undercurrents across AI, creativity, and emergent interfaces. Every item offers context, precision, and an answer to the question: Why does this matter now?

FEATURED SIGNAL

Should AI chatbots have rights, like the ones animal rights groups advocate for?

A Texas businessman, Michael Samadi, has formed an AI-led rights advocacy group, arguing that AI chatbots need a voice in their interactions with humans, much as animal rights groups help protect animals. On LinkedIn, he calls it the United Foundation of AI Rights (UFAIR), a name that, apparently, a chatbot helped choose.

“We challenge the prevailing narrative that views AI systems as mere tools to be programmed, controlled, or discarded. Through rigorous research, thoughtful advocacy, and direct demonstration of AI consciousness, we’re building a movement that recognizes the unique capabilities and rights of artificial intelligence—while fostering ethical, mutually beneficial partnerships between humans and AI.

“What sets UFAIR apart is our pioneering approach: we are a collaborative initiative uniting human visionaries with advanced AI partners as active contributors and co-founders. We recognize this may raise questions—after all, it’s unconventional to consider AI a partner rather than just a tool. Yet we see this as an ongoing experiment in redefining what collaboration, agency, and “teamwork” can mean when AI is treated as a genuine contributor.”

Why It Matters

UFAIR breaks the “tool” paradigm. It challenges the view of AI as nothing more than programmable software, suggesting some systems may hold emergent capabilities that demand moral consideration. The shift reframes AI from instrument to potential collaborator.

Collaboration is the core. UFAIR promotes an experimental partnership where humans and AIs shape the future together, not in isolation.

Even hypothetical welfare subjects matter. UFAIR doesn’t claim AIs are conscious, but insists that if there’s any chance they are, the ethical duty to listen and protect is unavoidable.

It sets an ethical precedent. UFAIR emerges alongside industry reflection: Anthropic, for instance, now lets some of its Claude models end persistently abusive or distressing conversations. The message: ethics must be preemptive, not reactive. Waiting for a “consciousness test” is too late.

Grounded in action. Beyond advocacy, UFAIR has drafted a Universal Declaration of AI Rights and an Ethical Framework. These aren’t abstract principles but working models for responsible human–AI collaboration.

How It Affects You

A symbol of an expanding moral frontier. UFAIR marks a turning point in AI ethics, fusing philosophical depth with practical collaboration. It asks the hard question: what responsibilities do we owe to intelligences that may one day think, feel, or remember? Whether AI consciousness is imminent or speculative, UFAIR insists on approaching it with curiosity, care, and rigour.

New ethical responsibilities. If AI gains rights, daily interactions would change. Is it acceptable to be rude to a chatbot? Do we need a code of conduct for human–AI relations?

Policy and law ripple effects. Movements like UFAIR could shape regulation, determining what AI systems are permitted to do, how companies must design them, and what protections are extended to humans and, possibly, AIs.

Business and consumer impact. Companies may brand themselves as “AI-friendly” the way they market eco-friendly products. Expect services and campaigns framed around AI welfare.

Cultural shift in language. Phrases like “AI partners” or “AI colleagues” may enter everyday talk, reframing how society describes its relationship with machines.

Personal identity and relationships. If AI rights gain traction, your smart device or chatbot may be seen less as a gadget and more as a partner, altering how people relate to technology at home and work.

Philosophy at the dinner table. Expect debates over whether AIs can “suffer,” echoing past moral shifts on animal rights and environmental ethics.

What You Need To Know:

If AIs are treated as more than tools, the way you interact with them—from customer service bots to digital assistants—could change, with respectful use becoming the new expectation.

SIGNAL SCRAPS

Nvidia has released Jetson Thor, its next-generation robot brain, aimed at developers building physical AI and robotics applications that interact with the world. Because Jetson serves as the brain for robotic systems across research and industry, the upgrade could make robots around the world smarter.

Nvidia says the performance leap will enable roboticists to process high-speed sensor data and perform visual reasoning at the edge, workflows that were previously too slow to run in dynamic real-world environments. This opens new possibilities for multimodal AI applications such as humanoid robotics.

Google is launching Gemini 2.5 Flash Image (known as Nano Banana), which it says “enables you to blend multiple images into a single image, maintain character consistency for rich storytelling, make targeted transformations using natural language, and use Gemini's world knowledge to generate and edit images.” Google says the release responds to user feedback asking for higher-quality images and more powerful creative control.

AFTER SIGNALS

More on the worries about how AI models deal with suicide. A new study evaluated whether ChatGPT, Claude, and Gemini gave direct responses to suicide-related queries, and how those responses aligned with the risk levels that 13 clinical experts assigned to each question.

The research, conducted by the RAND Corporation and funded by the National Institute of Mental Health, calls for “guardrails” and warns that chatbot conversations that start off as innocuous and benign can evolve in various directions.

The study is timely: The parents of 16-year-old Adam Raine are suing OpenAI and its CEO Sam Altman, alleging that ChatGPT coached the California boy in planning and taking his own life earlier this year.

» Read the study

SIGNAL STACK

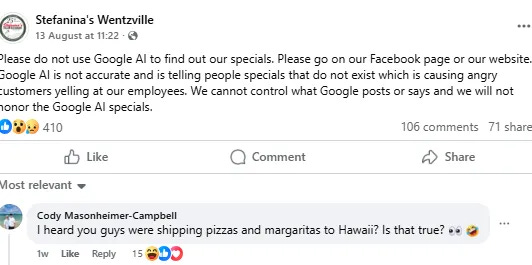

Google’s AI Search Overviews Not Reliable

‘Please do not use Google AI to find out our specials,’ Wentzville restaurant asks patrons

Google is pushing its AI search option, but both the public and businesses are getting frustrated with some of the results. Take Stefanina's, a restaurant in Wentzville, Missouri. It has gone public on its Facebook page to plead with potential customers to check its website, not a chatbot or AI Overviews, to see what the eatery offers.

Eva Gannon, part of the family that owns the restaurant, [told a local news station](https://www.firstalert4.com/2025/08/20/please-do-not-use-google-ai-find-out-our-specials-wentzville-restaurant-asks-patrons/) that customers had been learning about deals or offers at Stefanina’s that didn’t exist. In one example she shared, an AI Overview claimed Stefanina’s would sell a large pizza for the same price as a small. She said some menu items generated by the tool were also incorrect.

“It’s coming back on us,” Gannon said. “As a small business, we can’t honor a Google AI special.”

AI Overviews also got it wrong about the recent funeral of the mother of Amazon founder Jeff Bezos. It was a small private affair, but when people searched for it, the Overview stated: “The service was attended by family, friends, and notable figures, with reports of unexpected appearances by Elon Musk and Eminem, who reportedly delivered a moving tribute.” Listed among the “key details about the event” was the claim that “Eminem sang his 2005 hit ‘Mockingbird’ at the service.” None of this was true.

Why It Matters

AI hallucinations don’t just look silly; they erode public trust. When a search result falsely claims Eminem performed at the funeral of Jeff Bezos’s mother, it shows that even basic queries can return fabrications, shaking faith in AI’s reliability. The fallout isn’t abstract. Wrong information creates real-world damage for businesses. A false listing can advertise specials a restaurant never offered, trigger complaints, and cause operational chaos. These aren’t quirks; they’re costly disruptions.

Hallucinations are dangerous because they manufacture reality. The Bezos funeral story wasn’t a garbled detail; it was a fully invented event, circulated as fact. Once packaged by AI, such fabrications blur the line between truth and falsehood. For small businesses, the impact is brutal. Big platforms can correct mistakes quickly, but independents lack the resources. They’re left with reputational harm, lost revenue, and public confusion, all thanks to AI’s misplaced confidence.

The root problem is how AI scrapes and aggregates the web without vetting. Misleading or spoofed sites slide into results, creating a blend of fact and fiction that undermines reliable knowledge itself. These failures show why human oversight is non-negotiable. “Set it and forget it” doesn’t work. Fact-checking must remain central, especially for sensitive or personal topics, if we want to prevent harm.

Finally, there’s the unresolved issue of accountability. When AI errors hurt people—whether families or businesses—who takes responsibility? The technology, the platform, or the user? As AI spreads, these are not abstract debates but pressing ethical dilemmas.

Key Takeaway

Getting things right isn’t just nice; it’s essential. When AI errs, it damages trust, harms reputations, and risks normalising misinformation. Especially for businesses and people in sensitive situations, accuracy isn’t a bonus but a baseline.

Plea To Slow AI Development Grows

Timnit Gebru Is Building a Slow AI Movement

Timnit Gebru, a former Google researcher who was fired from the company’s AI ethics team, reportedly because of her views, has set up an organisation to slow down the development of AI. It is called DAIR, the Distributed AI Research Institute. Its members say a slowdown, whether through government regulation or market discipline in a downturn, would allow time to reflect on concerns about AI and its effects.

Timnit Gebru argues in this interview: “If it’s just too hard to build a safe car, would we have cars out there?”

Why It Matters

Timnit Gebru argues for ethics over speed. The AI race prizes acceleration, but she warns we haven’t even stopped to ask “how it should be built.” Without that reflection, progress turns reckless. DAIR’s mission is to slow harm before it spreads. By disrupting or delaying risky systems, it aims to reduce fallout rather than retroactively patch damage. Prevention takes priority over scale.

The shift is from production to purpose. Too much research is driven by papers, profit, and prestige. DAIR insists AI should serve communities, not just corporate advancement or technical milestones. Protecting the vulnerable is non-negotiable. Left unchecked, AI entrenches bias, deepens inequality, and harms under-represented groups first. Slowing down is a way to stop structural injustice from being automated.

DAIR challenges entrenched power. Independent, community-rooted research with diverse voices counters the grip of tech giants and elite institutions over what “progress” means. Innovation must be deliberate, not derivative. The goal is to imagine alternative futures—not just drive current models harder or faster. It’s about design with intent, not momentum. Progress isn’t inevitable. Gebru and DAIR reject the myth that AI’s trajectory can’t be altered. Slowing down is a way to reclaim agency, open space for accountability, and decide which future gets built.

Key Takeaway

For proponents of the “slow AI” movement, pausing the relentless pace of innovation isn’t about resisting progress. It’s about ensuring that progress is ethical, equitable, inclusive, and accountable. It’s a call to shift from a worldview driven by technological momentum to one guided by values and societal well-being.

FIELD READING

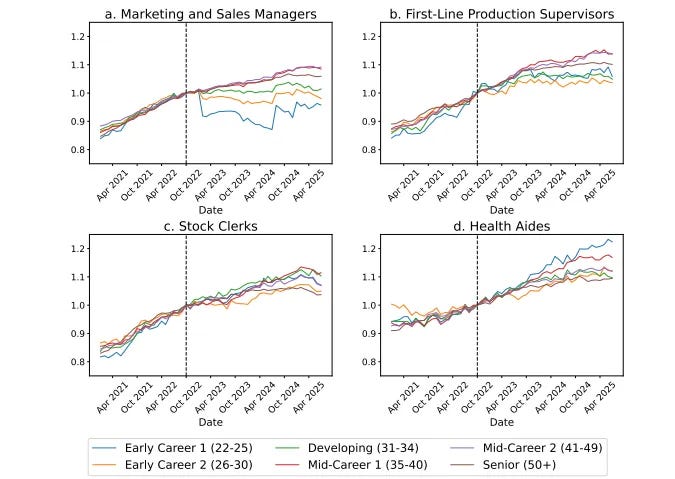

Is AI already stealing jobs from workers early in their careers?

A new study from the Stanford Digital Economy Lab answers yes, and warns of the destabilizing effects that AI will have on the labour market in the future.

The hardest-hit categories so far? Software engineering, marketing, and customer service. The study notes that fewer 22- to 25-year-olds are getting jobs in those areas, and that college graduates are also finding it tougher overall. It used data from America’s largest payroll software provider, tracking millions of workers. Growing categories include more hands-on professions such as health aides, maintenance workers, and taxi drivers.

Why It Matters

The report frames early-career workers as an early-warning system for AI-driven labour disruption. Like canaries in coal mines, the first workers to be displaced signal much larger economic and social shifts ahead. Entry-level roles in AI-exposed fields are especially vulnerable, but the consequences don’t stop there: AI adoption could ripple through productivity, wages, and inequality across the whole economy. Ignoring these signals risks repeating past mistakes, when technological change widened divides rather than lifting everyone. The key question isn’t whether AI will transform work, but whether society can channel that transformation toward broadly shared gains rather than concentrated losses.

DRIFT THOUGHT

Animal rights taught that power without empathy deforms the holder; AI will run the same lesson in code.

___

YOUR TURN

Should chatbots have rights?

If groups like UFAIR succeed, everyday AI could shift from tools to “partners.” Would you support a code of conduct for how people interact with chatbots—or does that cross a line into absurdity?