monday.machinas // Baby, AI Can Drive My Car ... and Maybe I'll Love You

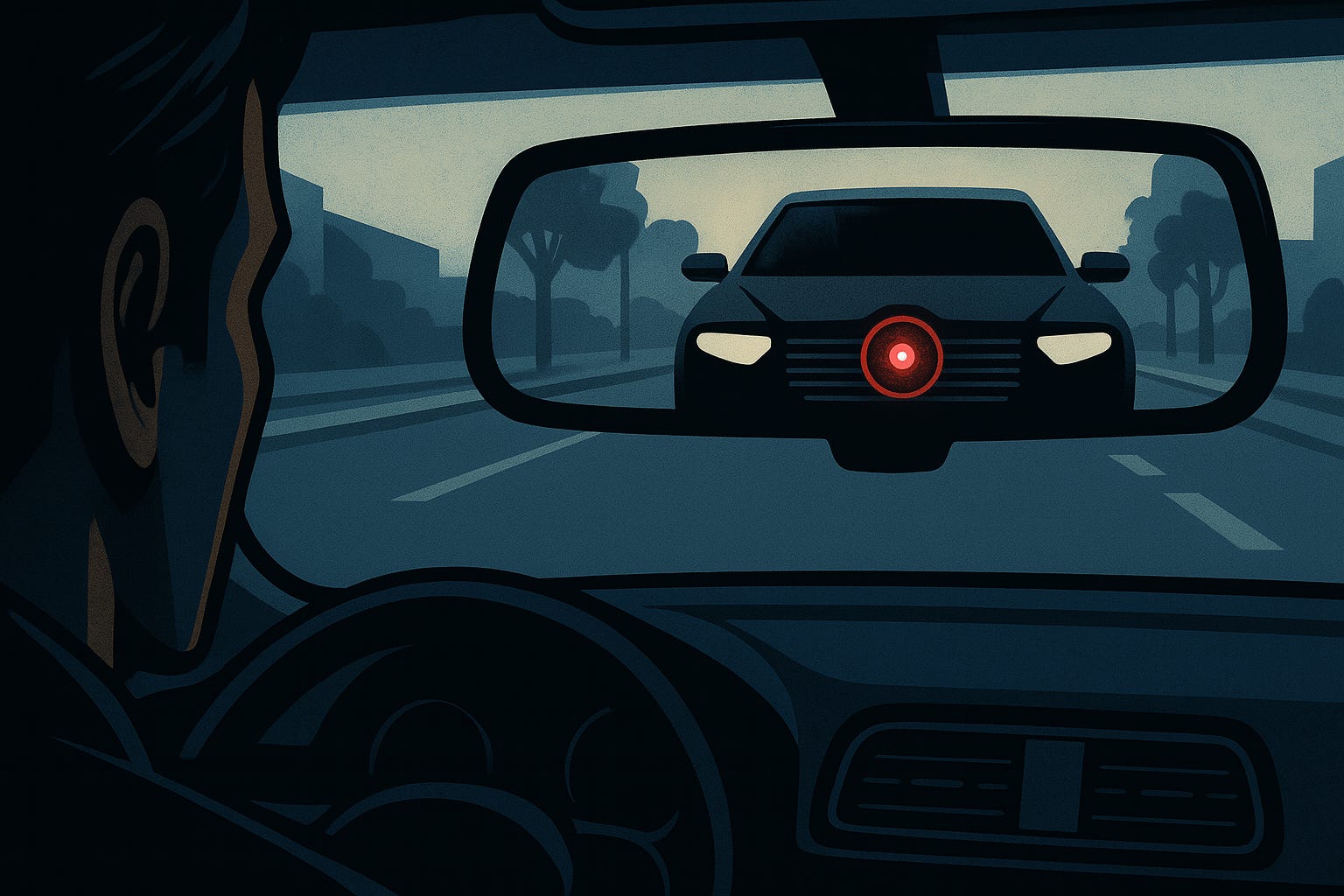

Tesla’s Robotaxis have just launched in Texas. Some of us would love to have someone else cope with traffic congestion and bad drivers. Are you ready for the first step toward letting robots drive your life?

In this Issue: Tesla’s robotaxi launch in Austin is more than a rollout: it’s a test of trust in autonomous machines acting alone. Our lead story asks: are we ready to share control? In Spotlight, Expedia’s AI pivot hints at the end of browsing, as holidays become summoned, not searched. This week’s Hot Take calls out LinkedIn’s slide into AI-generated blandness. Plus: AI stalking from a single photo, Duolingo’s AI shake-up, Getty’s UK lawsuit, and why Anthropic killed its AI-written blog.