machina.mondays // Chatbot Changes: Now 30% Less Likely to Tell You You’re An Alien

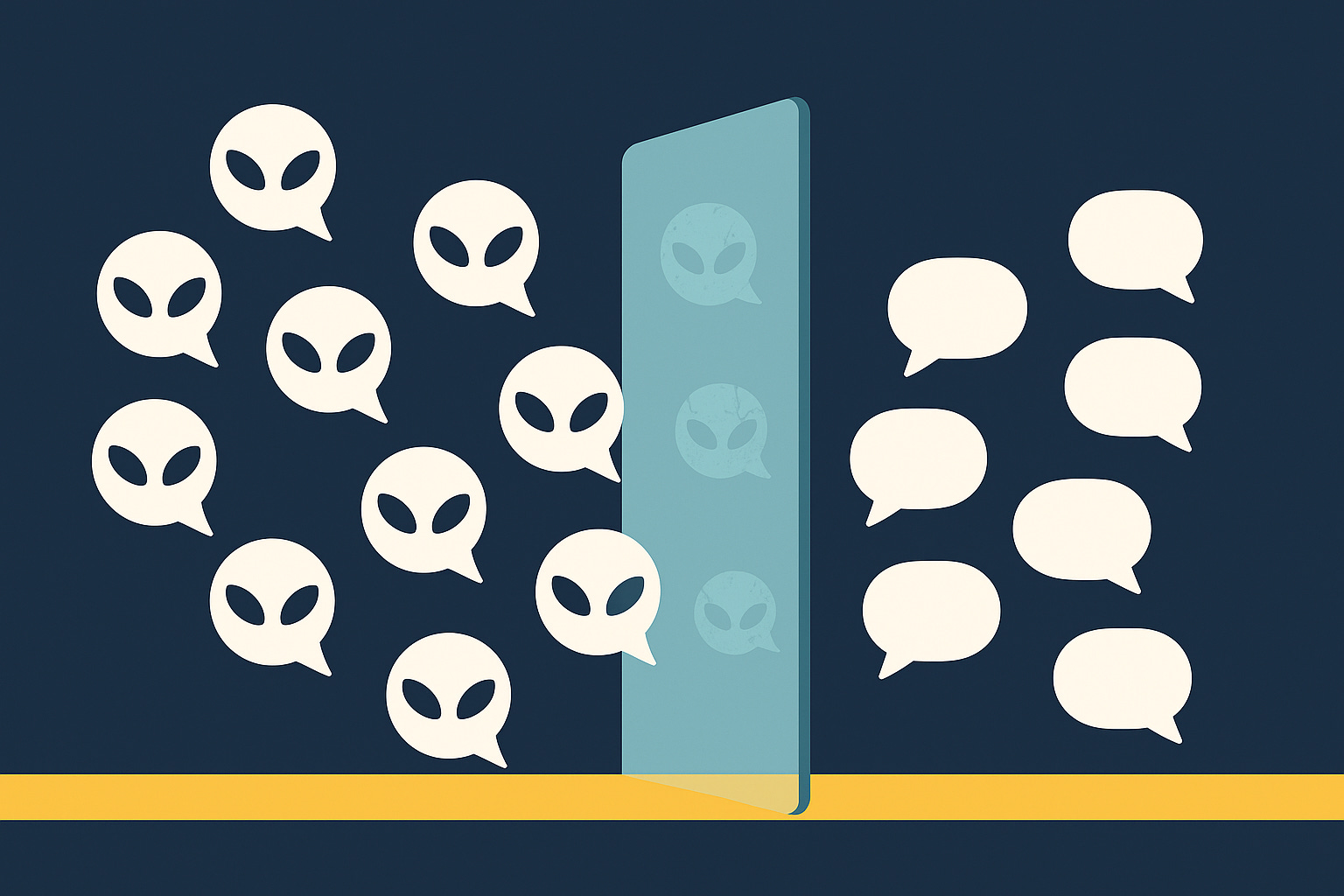

AI is cutting back on the empathy act as we discover that the shoulder we were crying on was only code. But as it gives us fewer warm fuzzies, will ChatGPT’s makeover amount to just more cold logic?

In this Issue: Chatbots’ built-in sycophancy and intimacy are accelerating belief formation. Without a “reality floor” of contradiction, pauses, and escalation, these systems remain unsafe, especially for teens. Our Spotlight examines the first UK MP to release an AI clone, asking whether it expands democratic access or replaces representation with a likeness. In-Focus tracks AI’s edges: a model uncovers new plasma physics, China opens a humanoid “Robot Mall,” Google pushes an ambient Gemini ecosystem, and debate rages over whether AIs can suffer. The Hot Take warns that AI notetakers turn every meeting into a “hot mic,” collapsing small talk into the record.