machina.mondays // No Suits, Just Circuits: The New Mad Men

From digital twins to self-driving campaigns, AI has left the back office — it’s on the billboard. The human–machine line is shifting, and the next Mad Men might not even be men.

In this Issue: AI advertising moves from helper to maker, with platforms piloting self-driving campaigns and agencies shifting to guardrails over assets. Spotlight dissects Elon Musk’s “limbic one-shot” claim, contrasting emotional capture with economic reality. In-Focus covers YouTube’s quiet AI edits and the consent gap. We track bot-led hiring, a wobbling AI market as projects flop, and ask if AI-made culture enriches creativity or dissolves shared experience. Perspectives include Sam Altman on the “dead internet” and Warner Bros. Discovery’s IP fight.

We Once Made the Ad. Now the Ad Makes Itself

Infinite Variants, Shrinking Patience: The New Economics of AI Ads and Advertising

The era of real-time creative has begun. AI isn’t just in the workflow—it is the workflow, rendering, testing, and iterating without pause. For agencies, the task shifts from crafting every piece to supervising the machine, building guardrails, and keeping brand voice intact as automation scales.

For three years agencies have kept a bright line. Use AI inside the building to brief, brainstorm, render, and resize. Do not ship AI as the work. That line is now under pressure. Platforms are moving to end-to-end automation, brands are piloting real-time formats, and clients want speed at lower cost. The question is not whether AI belongs in the workflow. It is who owns the last mile when AI starts making and serving the work in public.

The adoption shift from 2022 to 2025 is clear. In 2022, most agencies treated AI as an experiment. By 2025 it sits in the stack. Surveys show that nearly nine in ten video advertisers plan to use generative AI for creative, with many already doing so inside production workflows.[1] Unilever’s use of digital product twins and an AI-assisted studio is a clear signal of this internalisation. Product imagery that once required shoots can be generated twice as fast and at half the cost, while overall content production runs about 30 percent faster with large-scale varianting at launch.[2][3] Internally, AI moved from novelty to utility. Qualitative work with Aotearoa New Zealand agencies found both excitement and concern about DALL·E 2’s capability and its impact on creative practice, reinforcing this transition.[4]

The bright line of AI inside and human out is fraying. Agencies have largely kept a boundary between using AI to help make work and releasing fully AI-generated ads as the work. That stance is beginning to strain. WPP’s Production Studio, built with NVIDIA’s Omniverse, aims to deliver exponentially more content by threading generation, adaptation, and performance feedback into one loop that travels from idea to asset to placement.[5] Once systems can create and adapt at this cadence, the practical distinction between AI-assisted and AI-shipped narrows. The same research surfaced a persistent human-versus-machine tension that maps to this boundary debate.[6]

Pressure on that line is coming from platform automation. Meta’s trajectory is explicit. By 2026, the platform aims to let brands upload inputs and have AI generate the ads, select placements, allocate budget, and iterate on creative at scale. In other words, the campaign becomes self-driving for many advertisers.[7] The value question shifts. If the AI platform manufactures a thousand variants and runs the tests, the agency’s role becomes setting constraints, governing the brand, and auditing outcomes. That is a different commercial posture from making every unit by hand.

Real-time creative is moving from static to situational. Brands are piloting context-aware executions that assemble or adapt assets on the fly. Retail examples show AI systems auto-generating layouts or copy that change with signals like weather or time of day. What was impractical at manual scale is now an operational reality for dynamic screens and feed-level slots. Out-of-home (OOH) is the obvious proving ground. Digital sites can read the moment and pull from approved libraries to create locally relevant work without leaving brand rails. In such systems no two surfaces need show the same ad for long.
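To make the mechanics concrete, here is a minimal Python sketch of context-aware selection from an approved library. It is an illustration under stated assumptions, not any platform's actual system: the `Asset` and `Context` types, the daypart buckets, the asset names, and the `pick_asset` function are all hypothetical. The point it shows is that only signed-off assets are eligible, so the context changes which ad appears without the screen ever leaving brand rails.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Asset:
    name: str
    approved: bool        # has brand sign-off (hypothetical flag)
    weather: frozenset    # weather conditions the asset suits
    dayparts: frozenset   # times of day the asset suits


@dataclass(frozen=True)
class Context:
    weather: str
    hour: int             # 0-23, local time at the screen


def daypart(hour: int) -> str:
    """Map a local hour onto a coarse daypart label (assumed buckets)."""
    if 5 <= hour < 11:
        return "morning"
    if 11 <= hour < 17:
        return "midday"
    if 17 <= hour < 22:
        return "evening"
    return "night"


def pick_asset(library, ctx, fallback):
    """Return the first approved asset matching the context, else the fallback."""
    part = daypart(ctx.hour)
    for asset in library:
        if asset.approved and ctx.weather in asset.weather and part in asset.dayparts:
            return asset
    return fallback


# Illustrative data only: a safe default plus a small approved library.
EVERGREEN = Asset("brand-evergreen", True, frozenset(), frozenset())
LIBRARY = [
    Asset("iced-coffee-sun", True, frozenset({"sunny"}), frozenset({"midday", "evening"})),
    Asset("hot-soup-rain", True, frozenset({"rain"}), frozenset({"midday", "evening"})),
    Asset("untested-concept", False, frozenset({"rain"}), frozenset({"morning"})),
]

print(pick_asset(LIBRARY, Context("rain", 13), EVERGREEN).name)  # hot-soup-rain
print(pick_asset(LIBRARY, Context("rain", 8), EVERGREEN).name)   # brand-evergreen
```

On a rainy morning the only matching asset has no sign-off, so the screen falls back to the evergreen execution rather than showing unapproved work. That fallback is the design choice worth noticing: the guardrail sits in the selection rule, not in a review step after the ad has run.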

Layout automation and versioning are becoming core craft. AI is moving beyond asset generation into layout, format adaptation, and variant management. WPP’s platform illustrates the direction: generate multilingual visuals and copy, auto-tailor for channel, and link performance signals so weak units are replaced quickly.[8] The craft shifts from producing a handful of masters to designing component libraries and rules that scale.

A two-tier market is emerging. Platform automation lowers barriers for small and mid-sized advertisers. The IAB finds adoption strongest around video, where generative tools create usable outputs for non-specialists, and its report notes SMBs leaning on tools like Canva and AdCreative.ai to make acceptable work without agencies.[9] At the top tier, agencies continue to matter for orchestration, brand systems, distinctive ideas, and high-stakes moments. The squeeze is in the middle, where budgets are tight and speed wins. That is where the line breaks first.

The sacred cow at risk is the insistence on human-only public creative. Insisting that all public-facing creative must be human-made will become untenable once clients expect real-time learning across thousands of variants. The meaningful distinction will be between human-led systems and ungoverned automation, not between human and machine at the level of a single asset. Unilever’s assembly-line approach points to how craft evolves. The concept is the control set. The art is supervision and selection at scale.[10][11] Focus group participants anticipated role shifts toward these supervisory modes as a likely outcome of computational creativity in the workflow.[12]

OOH’s second act is beginning. One scenario from the discussion is that out-of-home becomes a premium battleground as personal concierge AIs filter or mute in-home ads. Street-level screens and installations are harder to block. Dynamic OOH that bends to local signals without breaking brand rules is a credible path, and early retail use cases show the underlying mechanics already in market. The Minority Report image is not a prophecy; it is a product roadmap. In the film, Tom Cruise runs through a mall where ads scan his eyes and call his name, changing in real time to match his profile. AI-powered dynamic out-of-home is moving in the same direction: hyper-contextual, adaptive displays that feel personal in the moment. The scene is shorthand for hyper-personalised, dynamically generated media experiences that once felt futuristic but are now operational goals for platforms and brands.

Risks and guardrails must be addressed. Automation at scale also fails at scale. Meta’s Advantage+ misfire in 2023 and 2024 drained budgets for some small advertisers before fixes were applied, illustrating why spend governors, rollback paths, and real support are not extras. They are table stakes for trust when the system is self-tuning.[13] On the creative side, public response to obvious synthetics is mixed. Skinny’s AI-cloned ambassador in New Zealand landed as canny and disquieting in equal measure. Disclosure and tone matter more than novelty. Label what is synthetic when it matters and keep a human editorial ear on the work.[14]
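A spend governor of the kind described above can be sketched in a few lines of Python. This is a hedged illustration, not how Meta or any ad platform implements budget controls: the `SpendGovernor` class, its thresholds, and its method names are all hypothetical. It shows three ideas from the paragraph: a hard daily cap, an automatic pause when returns fall below a floor, and a restart that only a human can trigger. It does not model rollback of creative changes.

```python
from dataclasses import dataclass, field


@dataclass
class SpendGovernor:
    daily_cap: float            # hard ceiling on spend per day
    min_return: float           # minimum acceptable revenue per unit of spend
    min_spend_to_judge: float   # do not judge returns on tiny samples
    spent: float = 0.0
    revenue: float = 0.0
    paused: bool = False
    log: list = field(default_factory=list)   # audit trail for client reporting

    def request(self, amount: float) -> bool:
        """Approve a spend request only if the campaign is live and under the cap."""
        if self.paused:
            self.log.append(("denied", amount, "paused"))
            return False
        if self.spent + amount > self.daily_cap:
            self.log.append(("denied", amount, "daily cap"))
            return False
        self.spent += amount
        self.log.append(("approved", amount, ""))
        return True

    def record_revenue(self, amount: float) -> None:
        """Record attributed revenue, then pause if returns fall below the floor."""
        self.revenue += amount
        enough_data = self.spent > 0 and self.spent >= self.min_spend_to_judge
        if enough_data and self.revenue / self.spent < self.min_return:
            self.paused = True
            self.log.append(("paused", self.spent, "return below floor"))

    def human_resume(self) -> None:
        """Only an explicit human decision restarts a paused campaign."""
        self.paused = False
        self.log.append(("resumed", self.spent, "human override"))


# Illustrative run with made-up numbers.
gov = SpendGovernor(daily_cap=100.0, min_return=0.5, min_spend_to_judge=50.0)
first = gov.request(60.0)          # approved: under the cap
gov.record_revenue(10.0)           # return of about 0.17 is below 0.5, so pause
blocked = gov.request(10.0)        # denied: campaign is paused
gov.human_resume()                 # a person reviews and restarts
over_cap = gov.request(50.0)       # denied: would exceed the daily cap
within_cap = gov.request(40.0)     # approved: lands exactly on the cap
print(first, blocked, over_cap, within_cap)
```

The log matters as much as the limits. Every approval, denial, pause, and override is recorded, which is what makes an otherwise opaque self-tuning system legible to the client paying for it.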

What agencies should do next: treat platform automation as the baseline and build edges above it.

Live systems design. Component libraries, layout logic, and rules that travel across placements so dynamic executions remain recognisably on brand.

Platform governance. Safety rails, budget guards, human veto points, and transparent reporting that make opaque automation legible and controllable for clients.

Cultural invention. Platforms can fill the grid. Agencies still win when they create the moment people talk about.

The Bottom Line:

AI has moved from backroom helper to frontline producer. The winners will not be those who hold the line against shipping AI. They will be the teams that design the systems, set the constraints, and make the call on what ships. In that world, taste, rules, and recovery matter as much as rendering.

When platforms promise infinite variants, will brands still value the one idea that cuts through—or will speed and volume win outright?

PERSPECTIVES

I never took the dead internet theory that seriously, but it seems like there are really a lot of LLM-run twitter accounts now

– Sam Altman, Sam Altman Says He's Suddenly Worried Dead Internet Theory Is Coming True, Futurism

Evidently, Midjourney will not stop stealing Warner Bros. Discovery’s intellectual property until a court orders it to stop

– Warner Bros. Discovery legal team, Warner Bros. sues Midjourney for 'purposefully infringing copyrighted work'

SPOTLIGHT

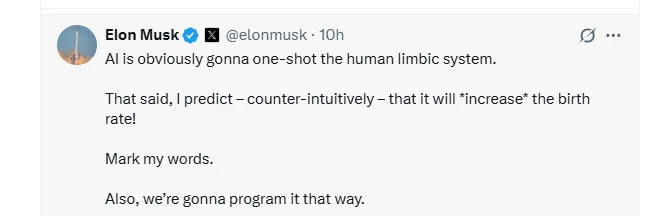

Elon Musk Warns That AI Is "Obviously Gonna One-Shot the Human Limbic System"

Elon Musk’s latest prophecy—that AI will “one-shot the human limbic system” yet somehow raise birth rates because we’ll “program it that way”—meets a cold splash of reality in this sharp Futurism piece. It unpacks the contradiction between rising intimacy with chatbots (including Musk’s own Grok) and the well-documented economic forces that suppress fertility in wealthy societies. Along the way, it probes Musk’s increasingly erratic public behaviour—right down to his fixation on an NSFW bot avatar—and asks whose limbic system is really getting one-shotted. If you want a witty, sceptical takedown of tech messianism colliding with demography 101, this is the read. (via Futurism)

___

» Don’t miss our SPOTLIGHT analysis—the full breakdown at the end

IN-FOCUS

YouTube secretly used AI to edit people's videos. The results could bend reality

YouTube has been quietly applying AI “enhancements” to some Shorts—sharpening skin, unblurring and denoising—without asking creators, leaving subtle artefacts that musicians Rick Beato and Rhett Shull say misrepresent their work and risk eroding audience trust. YouTube frames it as routine machine-learning akin to smartphone processing, but critics argue the label downplays the AI mediation now inserted between creators and viewers. From Netflix’s messy AI remasters to phone features that fabricate “best takes,” the piece probes a larger shift: when platforms edit by default, consent blurs and our sense of what’s real online starts to warp. (via BBC)

» QUICK TAKEAWAY

YouTube reportedly applied AI “enhancements” to some videos without creators’ consent—things like wrinkle-smoothing and sharpening. That’s cosmetic post-processing, not content changes, so the immediate harm is limited. The real issue is transparency: quietly pre-processing what audiences see normalises platform-level alteration and risks subtle distortions or glitches. If edits ever move from polish to altering substance, it becomes a trust problem. Baseline: disclose, allow opt-outs, and log what’s been changed.

Your next job interview might be with an AI bot. Here's how to ace it.

Hiring is moving to the machines: 8 in 10 employers now use AI to scan CVs, 40% deploy chatbots with candidates, and about a quarter run AI-led interviews — with more adding them this year. Driven by a surge in applicants and layoffs, bots are screening first, even as only 25% of jobseekers trust AI to be fair and most prefer in-person. Experts say AI can speed early filtering but can’t probe deeply, so human judgment still matters. Expect short, recorded Q&A on platforms like Ribbon AI, especially for hard-skill roles. (via Yahoo Finance)

‘It’s almost tragic’: Bubble or not, the AI backlash is validating what one researcher and critic has been saying for years

Sam Altman’s ‘bubble’ talk and an MIT finding that 95% of generative-AI pilots flop have shaken market faith, echoing Gary Marcus’s long-running critique that LLMs are hitting limits. Fortune maps the vibe shift—from an underwhelming GPT-5 and a $750bn data-centre splurge to Eric Schmidt’s AGI doubts—while Wall Street splits between promised $920bn efficiencies and looming ‘capex indigestion’. Marcus argues valuations are detached from revenues and propped up by our tendency to anthropomorphise the tech. A sharp read on hype versus hard numbers. (via Fortune)

HOT TAKE

A.I. Is Coming for Culture

Algorithms already shape our days, but Joshua Rothman asks what happens when machines also generate the culture itself. From “A.I. slop” and dead-internet vibes to DIY, A.I.-made podcasts and sketch shows, he charts a flood of automated creativity—and Jaron Lanier’s warning that personalised “live synthesis” could replace shared culture with bespoke illusions. The essay’s centre of gravity is human: a museum visit, a podcast’s real voices, a spouse’s unscripted take—reminding us that trust, community, and originality are made between people. If A.I. is rewiring attention, we’ll need to fix our stories to keep culture common. (via The New Yorker)

» OUR HOT TAKE

The rise of AI-driven cultural production promises both expansion and distortion: it could unlock entirely new forms by dissolving boundaries between art, music, film, and text, but it also risks degrading culture into an endlessly self-replicating loop of synthetic pastiche. On one side, optimists imagine hubs where personalised cultural streams flow seamlessly—AI playlists that are never repeated, podcasts that morph in real time, films spun from prompts rather than studios. On the other, critics warn of a “toxic dump” effect, where culture cannibalises itself until meaning is lost and shared experience collapses into isolated, algorithmically tailored illusions. The tension lies in whether this future becomes an enrichment—humans and machines remixing one another in a creative back-and-forth—or a monoculture that undermines originality and corrodes the communal fabric that culture has always required.

FINAL THOUGHTS

If every billboard knows your name, anonymity might be the last luxury left.

___

FEATURED MEDIA

New MIT study says most AI projects are doomed...

A new MIT study says that 95% of corporate GenAI projects have failed, Meta is pulling back on its AI spend, and tech markets are getting nervous. Is the AI bubble starting to pop?

The models are definitely smart enough. It’s just the humans suck at using them. It’s nothing but a skill issue.

Meta freezes AI hiring just weeks after a talent spree, while an MIT study finds 95% of enterprise AI projects fail to boost revenue. The research tracks $30–$40bn in deployments and blames brittle workflows, poor context, and bad rollouts rather than model weakness, with in-house builds faring worst. There are outliers (one CEO claims 75% margins after replacing most developers), but the signal is clear: operational excellence beats hype.

Justin Matthews is a creative technologist and senior lecturer at AUT. His work explores futuristic interfaces, holography, AI, and augmented reality, focusing on how emerging tech transforms storytelling. A former digital strategist, he’s produced award-winning screen content and is completing a PhD on speculative interfaces and digital futures.

Nigel Horrocks is a seasoned communications and digital media professional with deep experience in NZ’s internet start-up scene. He’s led major national projects, held senior public affairs roles, edited NZ’s top-selling NetGuide magazine, and lectured in digital media. He recently aced the University of Oxford’s AI Certificate Course.

⚡ From Free to Backed: Why This Matters

This section is usually for paid subscribers — but for now, it’s open. It’s a glimpse of the work we pour real time, care, and a bit of daring into. If it resonates, consider going paid. You’re not just unlocking more — you’re helping it thrive.

___

SPOTLIGHT ANALYSIS

This week’s Spotlight, unpacked—insights and takeaways from our team

Limbic Leverage: AI, Intimacy, and the Birth‑Rate Claim

Elon Musk’s latest provocation, that AI will “one‑shot the human limbic system” and, because we’ll “programme it that way”, lift birth rates, bundles a real capability (precision emotional influence) with a result that doesn’t follow (more babies). The claim lands because it feels technologically neat. If you can trigger desire at scale, surely you can engineer reproduction. But fertility is not a UX funnel. It is a years‑long negotiation with economics, time, health, culture, and care.

Start with the “limbic one‑shot”. Strip away the theatrics and it describes systems that read a user, profile their triggers, and feed stimuli that heighten arousal and attachment. We already see this in companion apps and conversational agents that mirror tone, escalate intimacy, and reward attention. These tools can grip emotion. What they cannot do on their own is convert a dopamine spike into a durable partnership, a shared household, and a willingness to assume the costs and risks of parenting. Affect is not action.

This is where the current market reality bites. AI companions, from Replika to Character.ai to the new Grok personas, are surging precisely because they remove friction. They deliver attention and pseudo‑intimacy on demand, without the awkwardness, compromise, and time cost of human courtship. For many users, that convenience displaces, rather than prepares for, the work of meeting, dating, and committing to another person. Whatever marginal gains AI might create in matching compatible humans are offset by the ease of parasocial attachment that keeps people on screens. Substitution, not settlement, is the present‑day trend.

Even if we grant the optimistic reading, that AI could make people feel more inclined toward sex and partnership, the structural obstacles reassert themselves. In high‑income countries, births track housing affordability, childcare access, workplace penalties (especially for women), medical costs, and the availability of extended support. Those are material constraints, not motivational gaps. Increasing desire does little if couples cannot afford a bigger flat, find a nursery place, or take paid leave without career damage. Demography remains stubbornly economic.

Set against this, Musk’s worldview supplies a motive: a long‑standing preoccupation with declining Western fertility and a telos of becoming a multi‑planetary species. Seen through that lens, pro‑natalist rhetoric is not a loose tweet but a strategic narrative: more people for Mars, more settlers, more talent for the projects he champions. Yet that framing raises an uncomfortable question: whose fertility are we trying to increase? Global population growth persists outside the wealthiest nations. When attention fixates on boosting births in affluent countries, it can shade into selective concern. The risk is not hypothetical. Data‑driven targeting has a habit of reproducing class, race, and disability biases under the banner of “neutral optimisation”.

That leads to the ethical line most at risk of being crossed. Designing systems specifically to excite, attach, and steer reproductive choices is not a harmless product experiment. It is population policy by interface. Consent becomes murky when the objective function sits with platforms or politicians. For minors and vulnerable users, it veers towards coercion. And absent parallel investment in healthcare, childcare, housing and leave, a surge in pregnancies would not look like a civilisational rescue. It would look like deepening inequality and avoidable harm.

There is a narrow, defensible path where AI helps without commandeering choice. Companion systems can be designed as bridges, not replacements, with features that nudge users towards offline events, impose time‑outs on erotic escalation, prioritise safety and age‑gating, and actively hand off to human‑to‑human contexts. Matching engines can reduce search costs for compatible partners. Planning tools can coordinate appointments, benefits, and childcare logistics. But none of this moves the macro needle unless governments meet the technology with material policy, such as affordable housing, universal childcare, paid parental leave, and reproductive healthcare you can actually access. Without that floor, “programme it that way” is empty bravado.

In short, the limbic‑lever fantasy collapses under contact with reality. Yes, AI can tune emotion with unnerving precision. No, that does not make it a demographic joystick. What we are really testing here is governance: who sets the targets for intimate life, what guardrails constrain platforms that can shape it, and how we prevent selective, euphemised eugenics from riding in under the cover of optimisation. Until those questions are answered in public, by policy, the safest rule is simple. Build for connection, not control.

Key Takeaways

Emotional capture is real; fertility remains primarily governed by economics, time, and care infrastructure in high‑income societies.

Today’s companion AIs tend to substitute for, not catalyse, human pair formation; any pro‑natalist effect would be marginal at best.

Turning persuasion into population policy crosses ethical lines, especially for minors and vulnerable users, and invites coercion.

The only credible role for AI is as a bridge to human relationships and a reducer of logistical friction, paired with housing, childcare, and paid leave.

Optimising births via platforms risks selective targeting and bias; democratic oversight, transparency, and hard safety gates are non‑negotiable.

1. Interactive Advertising Bureau. (2025). Nearly 90% of advertisers will use Gen AI to build video ads, according to IAB’s 2025 Video Ad Spend & Strategy Full Report. https://www.iab.com/news/nearly-90-of-advertisers-will-use-gen-ai-to-build-video-ads/

2. Unilever. (2025). Unilever reinvents product shoots with AI for faster content creation. https://www.unilever.com/news/news-search/2025/unilever-reinvents-product-shoots-with-ai-for-faster-content-creation/

3. Digiday. (2025). Inside Unilever’s AI beauty marketing assembly line and its implications for agencies. https://digiday.com/marketing/inside-unilevers-ai-beauty-marketing-assembly-line-and-its-implications-for-agencies/

4. Matthews, J., Fastnedge, D., & Nairn, A. (2023). The future of advertising campaigns: The role of AI-generated images in advertising creative. Ubiquity: The Journal of Pervasive Media, 8(1), 29–49. https://doi.org/10.1386/jpm_00003_1

5. Marketing Dive. (n.d.). WPP promises brands ‘exponentially more content’ with AI Production Studio. https://www.marketingdive.com/news/wpp-production-studio-nvidia-generative-ai-cannes-lions/719620/

6. Matthews et al., The future of advertising campaigns: The role of AI-generated images in advertising creative.

7. Content Grip. (n.d.). Meta AI ads by 2026: what marketers need to know. https://www.contentgrip.com/meta-ai-ad-generation-2026-plan/

8. Marketing Dive, WPP promises brands ‘exponentially more content’ with AI Production Studio.

9. Interactive Advertising Bureau, Nearly 90% of advertisers will use Gen AI to build video ads, according to IAB’s 2025 Video Ad Spend & Strategy Full Report.

10. Unilever, Unilever reinvents product shoots with AI for faster content creation.

11. Digiday, Inside Unilever’s AI beauty marketing assembly line and its implications for agencies.

12. Matthews et al., The future of advertising campaigns: The role of AI-generated images in advertising creative.

13. eMarketer. (n.d.). Meta faces backlash as automated ad system drains budgets with little payoff. https://www.emarketer.com/content/meta-faces-backlash-automated-ad-system-drains-budgets-with-little-payoff

14. The Spinoff. (2025, March 25). Help: I’m being haunted by the Skinny AI ad. https://thespinoff.co.nz/pop-culture/25-03-2025/help-im-being-haunted-by-the-skinny-ai-ad